Note

Go to the end to download the full example code

Linear regression#

What we do in this notebook#

Here we demonstrate use of CoFI on a simple linear regression problem, where we fit a polynomial function to data, using three different algorithms:

by solution of a linear system of equations,

by optimization of a data misfit function

by Bayesian sampling of a Likelihood multiplied by a prior.

Learning outcomes#

A demonstration of running CoFI for a class of parameter fitting problem. Example of a CoFI template.

A demonstration of how CoFI may be used to experiment with different inference approaches under a common interface.

A demonstration of CoFI’s expandability in that it may be used with pre-set, or user defined, misfits, likelihood or priors.

# Environment setup (uncomment code below)

# !pip install -U cofi

Linear regression#

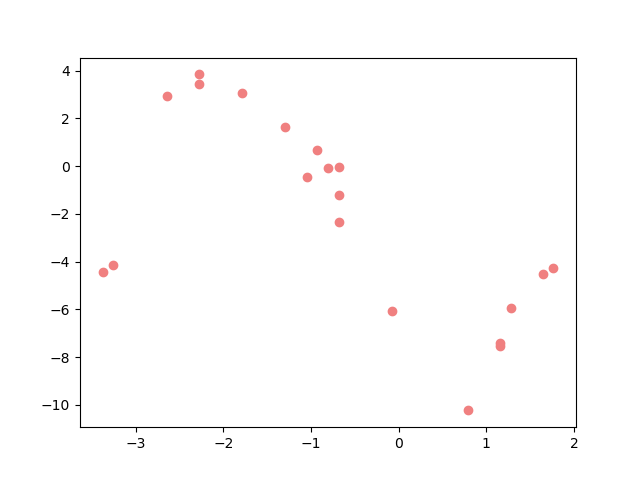

Lets start with some (x,y) data.

import numpy as np

import matplotlib.pyplot as plt

# here is some (x,y) data

data_x = np.array([1.1530612244897958, -0.07142857142857162, -1.7857142857142858,

1.6428571428571423, -2.642857142857143, -1.0510204081632653,

1.1530612244897958, -1.295918367346939, -0.806122448979592,

-2.2755102040816326, -2.2755102040816326, -0.6836734693877551,

0.7857142857142856, 1.2755102040816322, -0.6836734693877551,

-3.2551020408163267, -0.9285714285714288, -3.377551020408163,

-0.6836734693877551, 1.7653061224489797])

data_y = np.array([-7.550931153863841, -6.060810406314714, 3.080063056254076,

-4.499764131508964, 2.9462042659962333, -0.4645899453212615,

-7.43068837808917, 1.6273774547833582, -0.05922697815443567,

3.8462283231266903, 3.425851020301113, -0.05359797104829345,

-10.235538857712598, -5.929113775071286, -1.1871766078924957,

-4.124258811692425, 0.6969191559961637, -4.454022624935177,

-2.352842192972056, -4.25145590011172])

sigma = 1 # estimation on the data noise

And now lets plot the data.

Problem description#

To begin with, we will work with polynomial curves,

Here, \(M\) is the ‘order’ of the polynomial: if \(M=1\) we have a straight line with 2 parameters, if \(M=2\) it will be a quadratic with 3 parameters, and so on. The \(m_j, (j=0,\dots M)\) are the ‘model coefficients’ that we seek to constrain from the data.

For this class of problem the forward operator takes the following form:

This clearly has the required general form, \(\mathbf{d} =G{\mathbf m}\).

where:

\(\textbf{d}\) is the vector of data values, (\(y_0,y_1,\dots,y_N\));

\(\textbf{m}\) is the vector of model parameters, (\(m_0,m_1,m_2\));

\(G\) is the basis matrix (or design matrix) of this linear regression problem (also called the Jacobian matrix for this linear problem).

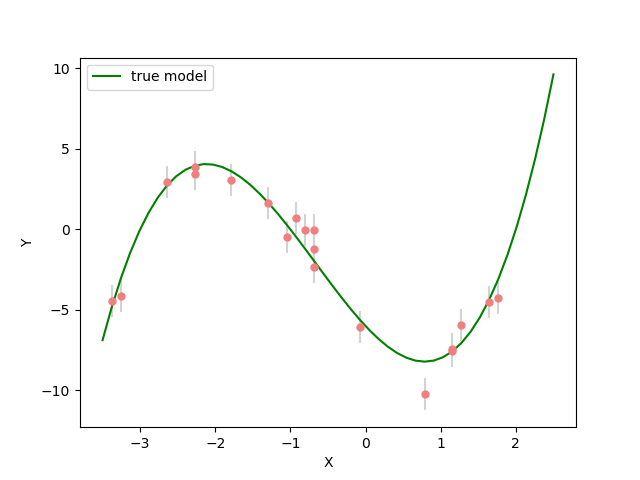

We have a set of noisy data values, \(y_i (i=0,\dots,N)\), measured at known locations, \(x_i (i=0,\dots,N)\), and wish to find the best fit degree 3 polynomial.

The function that generated our data is : \(y=-6-5x+2x^2+x^3\), and we have added Gaussian random noise, \({\cal N}(0,\sigma^2)\), with \(\sigma=1.0\).

We now build the Jacobian/G matrix for this problem and define a forward function which simply multiplies \(\mathbf m\) by \(G\).

Define the true model for later.

# True model for plotting

x = np.linspace(-3.5,2.5) # x values to plot

true_model = np.array([-6, -5, 2, 1]) # we know it for this case which will be useful later for comparison.

true_y = jacobian(x,4).dot(true_model) # y values for true curve

Now lets plot the data with the curve from the true polynomial coefficients.

# Some plotting utilities

def plot_model(x,y, label, color=None):

#x = np.linspace(-3.5,2.5)

#y = jacobian(x).dot(model)

plt.plot(x, y, color=color or "green", label=label)

plt.xlabel("X")

plt.ylabel("Y")

plt.legend()

def plot_models(models, label="Posterior samples", color="seagreen", alpha=0.1):

x = np.linspace(-3.5,2.5)

G = jacobian(x)

plt.plot(x, G.dot(models[0]), color=color, label=label, alpha=alpha)

for m in models:

plt.plot(x, G.dot(m), color=color, alpha=alpha)

plt.xlabel("X")

plt.ylabel("Y")

plt.legend()

Now we have the data and the forward model we can start to try and estimate the coefficients of the polynomial from the data.

The structure of CoFI#

In the workflow of cofi, there are three main components:

BaseProblem, InversionOptions, and Inversion.

BaseProblemdefines the inverse problem including any user supplied quantities such as data vector, number of model parameters and measure of fit between model predictions and data.inv_problem = BaseProblem() inv_problem.set_objective(some_function_here) inv_problem.set_jacobian(some_function_here) inv_problem.set_initial_model(a_starting_point) # if needed, e.g. we are solving a nonlinear problem by optimization

InversionOptionsdescribes details about how one wants to run the inversion, including the backend tool and solver-specific parameters. It is based on the concept of amethodandtool.inv_options = InversionOptions() inv_options.suggest_solving_methods() inv_options.set_solving_method("matrix solvers") inv_options.suggest_tools() inv_options.set_tool("scipy.linalg.lstsq") inv_options.summary()

Inversioncan be seen as an inversion engine that takes in the above two as information, and will produce anInversionResultupon running.inv = Inversion(inv_problem, inv_options) result = inv.run()

Internally CoFI decides the nature of the problem from the quantities set by the user and performs internal checks to ensure it has all that it needs to solve a problem.

1. Linear system solver#

from cofi import BaseProblem, InversionOptions, Inversion

Step 1. Define CoFI BaseProblem#

inv_problem = BaseProblem()

inv_problem.set_data(data_y)

inv_problem.set_jacobian(jacobian())

inv_problem.set_data_covariance_inv(Cd_inv())

Step 2. Define CoFI InversionOptions#

inv_options = InversionOptions()

Using the information supplied, we can ask CoFI to suggest some solving methods.

inv_options.suggest_solving_methods()

The following solving methods are supported:

{'sampling', 'optimization', 'matrix solvers'}

Use `suggest_tools()` to see a full list of backend tools for each method

We can ask CoFI to suggest some specific software tools as well.

inv_options.suggest_tools()

Here's a complete list of inversion tools supported by CoFI (grouped by methods):

{

"optimization": [

"scipy.optimize.minimize",

"scipy.optimize.least_squares",

"torch.optim",

"cofi.border_collie_optimization"

],

"matrix solvers": [

"scipy.linalg.lstsq",

"cofi.simple_newton"

],

"sampling": [

"emcee",

"bayesbay",

"neighpy"

]

}

inv_options.set_solving_method("matrix solvers") # lets decide to use a matrix solver.

inv_options.summary()

=============================

Summary for inversion options

=============================

Solving method: matrix solvers

Use `suggest_solving_methods()` to check available solving methods.

-----------------------------

Backend tool: `<class 'cofi.tools._scipy_lstsq.ScipyLstSq'> (by default)` - SciPy's wrapper function over LAPACK's linear least-squares solver, using 'gelsd', 'gelsy' (default), or 'gelss' as backend driver

References: ['https://docs.scipy.org/doc/scipy/reference/generated/scipy.linalg.lstsq.html', 'https://www.netlib.org/lapack/lug/node27.html']

Use `suggest_tools()` to check available backend tools.

-----------------------------

Solver-specific parameters: None set

Use `suggest_solver_params()` to check required/optional solver-specific parameters.

# below is optional, as this has already been the default tool under "linear least square"

inv_options.set_tool("scipy.linalg.lstsq")

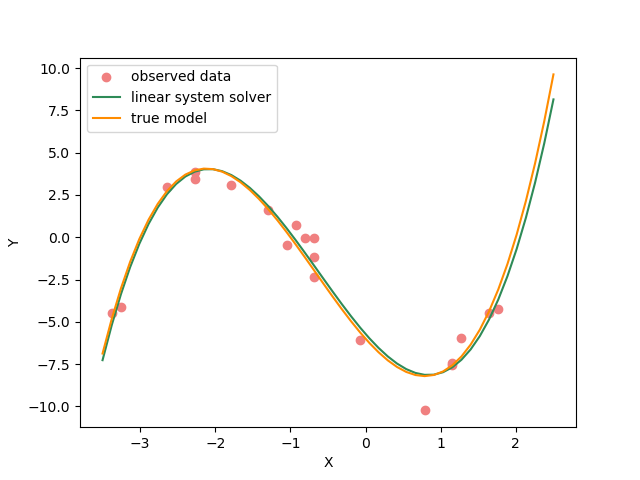

Step 3. Define CoFI Inversion and run#

Our choices so far have defined a linear parameter estimation problem

(without any regularization) to be solved within a least squares

framework. In this case the selection of a matrix solvers method

will mean we are calculating the standard least squares solution

and our choice of backend tool scipy.linalg.lstsq, means that we

will employ scipy’s linalg package to perform the numerics.

Lets run CoFI.

inv = Inversion(inv_problem, inv_options)

inv_result = inv.run()

print(f"The inversion result from `scipy.linalg.lstsq`: {inv_result.model}\n")

inv_result.summary()

The inversion result from `scipy.linalg.lstsq`: [-5.71964359 -5.10903808 1.82553662 0.97472374]

============================

Summary for inversion result

============================

SUCCESS

----------------------------

model: [-5.71964359 -5.10903808 1.82553662 0.97472374]

sum_of_squared_residuals: []

effective_rank: 4

singular_values: [3765.51775745 69.19268194 16.27124488 3.85437889]

model_covariance: [[ 0.19027447 0.05812534 -0.08168411 -0.02550866]

[ 0.05812534 0.08673796 -0.03312809 -0.01812686]

[-0.08168411 -0.03312809 0.05184851 0.01704165]

[-0.02550866 -0.01812686 0.01704165 0.00676031]]

Lets plot the solution.

plot_data()

plot_model(x,jacobian(x).dot(inv_result.model), "linear system solver", color="seagreen")

plot_model(x,true_y, "true model", color="darkorange")

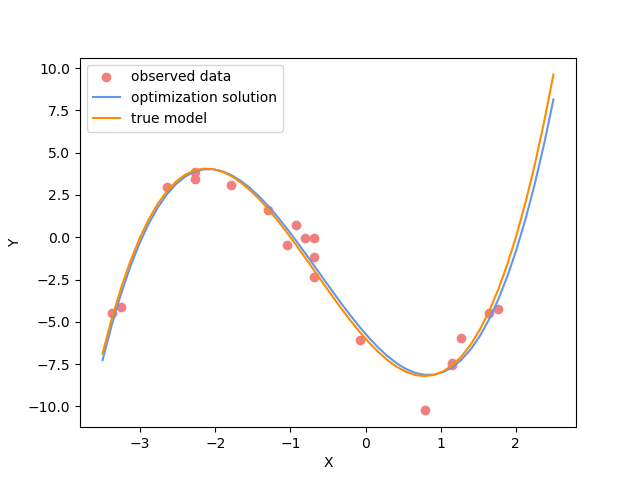

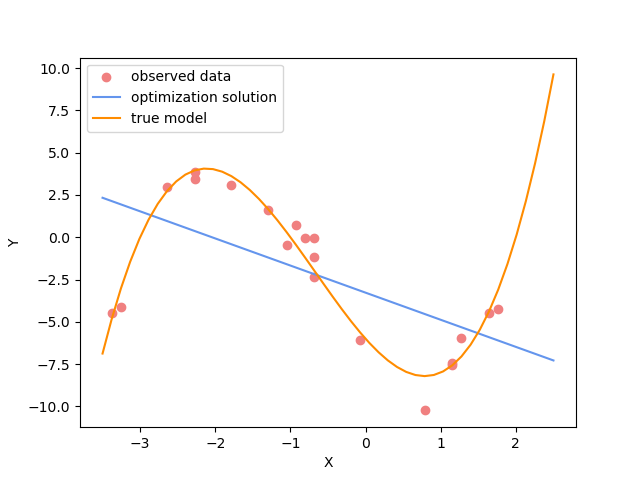

2. Optimizer#

The same overdetermined linear problem, \(\textbf{d} = G\textbf{m}\), with Gaussian data noise can also be solved by minimising the squares of the residual of the linear equations, e.g. \(\textbf{r}^T \textbf{C}_d^{-1}\textbf{r}\) where \(\textbf{r}=\textbf{d}-G\textbf{m}\). The above matrix solver solution gives us the best data fitting model, but a direct optimisation approach could also be used, say when the number of unknowns is large and we do not wish, or are unable to provide the Jacobian function.

So we use a plain optimizer scipy.optimize.minimize to demonstrate

this ability.

######## CoFI BaseProblem - provide additional information

inv_problem.set_initial_model(np.ones(nparams))

inv_problem.set_forward(forward)

inv_problem.set_data_misfit("squared error")

# inv_problem.set_objective(your_own_misfit_function) # (optionally) if you'd like to define your own misfit

# inv_problem.set_gradient(your_own_gradient_of_misfit_function) # (optionally) if you'd like to define your own misfit gradient

######## CoFI InversionOptions - set a different tool

inv_options_2 = InversionOptions()

inv_options_2.set_tool("scipy.optimize.minimize")

inv_options_2.set_params(method="Nelder-Mead")

######## CoFI Inversion - run it

inv_2 = Inversion(inv_problem, inv_options_2)

inv_result_2 = inv_2.run()

######## CoFI InversionResult - check result

print(f"The inversion result from `scipy.optimize.minimize`: {inv_result_2.model}\n")

inv_result_2.summary()

The inversion result from `scipy.optimize.minimize`: [-5.71967431 -5.10913992 1.82556456 0.9747426 ]

============================

Summary for inversion result

============================

SUCCESS

----------------------------

fun: 14.961508008942793

nit: 193

nfev: 330

status: 0

message: Optimization terminated successfully.

final_simplex: (array([[-5.71967431, -5.10913992, 1.82556456, 0.9747426 ],

[-5.71958302, -5.10907158, 1.8255083 , 0.97472628],

[-5.71969118, -5.10911404, 1.82556388, 0.97474474],

[-5.7197282 , -5.10917942, 1.82554925, 0.97474097],

[-5.71960767, -5.10913354, 1.82551338, 0.97473478]]), array([14.96150801, 14.96150804, 14.96150805, 14.9615082 , 14.96150821]))

model: [-5.71967431 -5.10913992 1.82556456 0.9747426 ]

plot_data()

plot_model(x,jacobian(x).dot(inv_result_2.model), "optimization solution", color="cornflowerblue")

plot_model(x,true_y, "true model", color="darkorange")

Challenge: Change the polynomial degree#

Try and replace the 3rd order polynomial with a 1st order polynomial (i.e. \(M=1\)) by adding the required commands below. What does the plot looks like?

Start from code below:

inv_problem = BaseProblem()

inv_problem.set_data(data_y)

inv_problem.set_jacobian(jacobian(n=<CHANGE ME>))

inv_problem.set_data_covariance_inv(Cd_inv())

inv_options.set_solving_method("matrix solvers") # lets decide to use a matrix solver.

inv = Inversion(inv_problem, inv_options)

inv_result = inv.run()

print("Inferred curve with n = <CHANGE ME> ")

plot_data()

plot_model(x,jacobian(x,n=<CHANGE ME>).dot(inv_result.model), "optimization solution", color="cornflowerblue")

plot_model(x,true_y, "true model", color="darkorange")

# Copy the template above, Replace <CHANGE ME> with your answer

#@title Solution

inv_problem = BaseProblem()

inv_problem.set_data(data_y)

inv_problem.set_jacobian(jacobian(n=2))

inv_problem.set_data_covariance_inv(Cd_inv())

inv_options.set_solving_method("matrix solvers") # lets decide to use a matrix solver.

inv = Inversion(inv_problem, inv_options)

inv_result = inv.run()

print("Inferred curve with n = 2 ")

plot_data()

plot_model(x,jacobian(x,n=2).dot(inv_result.model), "optimization solution", color="cornflowerblue")

plot_model(x,true_y, "true model", color="darkorange")

Inferred curve with n = 2

3. Bayesian sampling#

Likelihood#

Since data errors follow a Gaussian in this example, we can define a Likelihood function, \(p({\mathbf d}_{obs}| {\mathbf m})\).

where \({\mathbf d}_{obs}\) represents the observed y values and \({\mathbf d}_{pred}({\mathbf m})\) are those predicted by the polynomial model \(({\mathbf m})\). The Likelihood is defined as the probability of observing the data actually observed, given a model. In practice we usually only need to evaluate the log of the Likelihood, \(\log p({\mathbf d}_{obs} | {\mathbf m})\). To do so, we require the inverse data covariance matrix describing the statistics of the noise in the data, \(C_D^{-1}\) . For this problem the data errors are independent with identical standard deviation in noise for each datum. Hence \(C_D^{-1} = \frac{1}{\sigma^2}I\) where \(\sigma=1\).

Note that the user could specify any appropriate Likelihood function of their choosing here.

Prior#

Bayesian sampling requires a prior probability density function. A common problem with polynomial coefficients as model parameters is that it is not at all obvious what a prior should be. Here we choose a uniform prior with specified bounds

where \(l_i\) and \(u_i\) are lower and upper bounds on the \(i\)th model coefficient.

Here use the uniform distribution with \({\mathbf l}^T = (-10.,-10.,-10.,-10.)\), and \({\mathbf u}^T = (10.,10.,10.,10.)\).

m_lower_bound = np.ones(nparams) * (-10.) # lower bound for uniform prior

m_upper_bound = np.ones(nparams) * 10 # upper bound for uniform prior

def log_prior(model): # uniform distribution

for i in range(len(m_lower_bound)):

if model[i] < m_lower_bound[i] or model[i] > m_upper_bound[i]: return -np.inf

return 0.0 # model lies within bounds -> return log(1)

Note that the user could specify any appropriate Prior PDF of their choosing here.

Bayesian sampling#

In this aproach we sample a probability distribution rather than find a single best fit solution. Bayes’ theorem tells us the the posterior distribution is proportional to the Likelihood and the prior.

where \(K\) is some constant. Under the assumptions specified \(p(\mathbf{m}|\mathbf{d})\) gives a probability density of models that are supported by the data. We seek to draw random samples from \(p(\mathbf{m}|\mathbf{d})\) over model space and then to make inferences from the resulting ensemble of model parameters.

In this example we make use of The Affine Invariant Markov chain Monte Carlo (MCMC) Ensemble sampler Goodman and Weare 2010 to sample the posterior distribution of the model. (See more details about emcee).

Starting points for random walkers#

Now we define some hyperparameters (e.g. the number of walkers and steps), and initialise the starting positions of walkers. We start all walkers in a small ball about a chosen point \((0, 0, 0, 0)\).

nwalkers = 32

ndim = nparams

nsteps = 10000

walkers_start = np.zeros(nparams) + 1e-4 * np.random.randn(nwalkers, ndim)

Add the information and run with CoFI#

######## CoFI BaseProblem - provide additional information

inv_problem.set_log_prior(log_prior)

inv_problem.set_log_likelihood(log_likelihood)

inv_problem.set_model_shape(ndim)

######## CoFI InversionOptions - get a different tool

inv_options_3 = InversionOptions()

inv_options_3.set_tool("emcee") # Here we use to Affine Invariant McMC sampler from Goodman and Weare (2010).

inv_options_3.set_params(nwalkers=nwalkers, nsteps=nsteps, initial_state=walkers_start, progress=True)

######## CoFI Inversion - run it

inv_3 = Inversion(inv_problem, inv_options_3)

inv_result_3 = inv_3.run()

######## CoFI InversionResult - check result

print(f"The inversion result from `emcee`:")

inv_result_3.summary()

0%| | 0/10000 [00:00<?, ?it/s]

1%| | 116/10000 [00:00<00:08, 1152.77it/s]

2%|▏ | 232/10000 [00:00<00:08, 1154.09it/s]

3%|▎ | 348/10000 [00:00<00:08, 1153.15it/s]

5%|▍ | 464/10000 [00:00<00:08, 1152.23it/s]

6%|▌ | 580/10000 [00:00<00:08, 1152.94it/s]

7%|▋ | 696/10000 [00:00<00:08, 1153.92it/s]

8%|▊ | 812/10000 [00:00<00:07, 1154.58it/s]

9%|▉ | 928/10000 [00:00<00:07, 1154.42it/s]

10%|█ | 1044/10000 [00:00<00:07, 1154.81it/s]

12%|█▏ | 1160/10000 [00:01<00:07, 1154.95it/s]

13%|█▎ | 1276/10000 [00:01<00:07, 1152.74it/s]

14%|█▍ | 1392/10000 [00:01<00:07, 1152.77it/s]

15%|█▌ | 1508/10000 [00:01<00:07, 1152.27it/s]

16%|█▌ | 1624/10000 [00:01<00:07, 1153.27it/s]

17%|█▋ | 1740/10000 [00:01<00:07, 1153.65it/s]

19%|█▊ | 1856/10000 [00:01<00:07, 1154.34it/s]

20%|█▉ | 1972/10000 [00:01<00:06, 1154.30it/s]

21%|██ | 2088/10000 [00:01<00:06, 1153.91it/s]

22%|██▏ | 2204/10000 [00:01<00:06, 1153.46it/s]

23%|██▎ | 2320/10000 [00:02<00:06, 1153.33it/s]

24%|██▍ | 2436/10000 [00:02<00:06, 1153.49it/s]

26%|██▌ | 2552/10000 [00:02<00:06, 1154.07it/s]

27%|██▋ | 2668/10000 [00:02<00:06, 1155.02it/s]

28%|██▊ | 2784/10000 [00:02<00:06, 1154.80it/s]

29%|██▉ | 2900/10000 [00:02<00:06, 1154.09it/s]

30%|███ | 3016/10000 [00:02<00:06, 1154.09it/s]

31%|███▏ | 3132/10000 [00:02<00:05, 1154.52it/s]

32%|███▏ | 3248/10000 [00:02<00:05, 1154.23it/s]

34%|███▎ | 3364/10000 [00:02<00:05, 1154.50it/s]

35%|███▍ | 3480/10000 [00:03<00:05, 1155.09it/s]

36%|███▌ | 3596/10000 [00:03<00:05, 1153.45it/s]

37%|███▋ | 3712/10000 [00:03<00:05, 1154.06it/s]

38%|███▊ | 3828/10000 [00:03<00:05, 1153.62it/s]

39%|███▉ | 3944/10000 [00:03<00:05, 1154.14it/s]

41%|████ | 4060/10000 [00:03<00:05, 1153.83it/s]

42%|████▏ | 4176/10000 [00:03<00:05, 1154.10it/s]

43%|████▎ | 4292/10000 [00:03<00:04, 1142.69it/s]

44%|████▍ | 4408/10000 [00:03<00:04, 1145.58it/s]

45%|████▌ | 4524/10000 [00:03<00:04, 1148.15it/s]

46%|████▋ | 4640/10000 [00:04<00:04, 1149.22it/s]

48%|████▊ | 4756/10000 [00:04<00:04, 1150.73it/s]

49%|████▊ | 4872/10000 [00:04<00:04, 1151.58it/s]

50%|████▉ | 4988/10000 [00:04<00:04, 1151.46it/s]

51%|█████ | 5104/10000 [00:04<00:04, 1151.73it/s]

52%|█████▏ | 5220/10000 [00:04<00:04, 1150.77it/s]

53%|█████▎ | 5336/10000 [00:04<00:04, 1151.82it/s]

55%|█████▍ | 5452/10000 [00:04<00:03, 1152.74it/s]

56%|█████▌ | 5568/10000 [00:04<00:03, 1153.38it/s]

57%|█████▋ | 5684/10000 [00:04<00:03, 1153.25it/s]

58%|█████▊ | 5800/10000 [00:05<00:03, 1152.88it/s]

59%|█████▉ | 5916/10000 [00:05<00:03, 1153.01it/s]

60%|██████ | 6032/10000 [00:05<00:03, 1152.99it/s]

61%|██████▏ | 6148/10000 [00:05<00:03, 1149.06it/s]

63%|██████▎ | 6264/10000 [00:05<00:03, 1150.26it/s]

64%|██████▍ | 6380/10000 [00:05<00:03, 1151.74it/s]

65%|██████▍ | 6496/10000 [00:05<00:03, 1152.57it/s]

66%|██████▌ | 6612/10000 [00:05<00:02, 1153.29it/s]

67%|██████▋ | 6728/10000 [00:05<00:02, 1153.36it/s]

68%|██████▊ | 6844/10000 [00:05<00:02, 1153.45it/s]

70%|██████▉ | 6960/10000 [00:06<00:02, 1152.89it/s]

71%|███████ | 7076/10000 [00:06<00:02, 1153.35it/s]

72%|███████▏ | 7192/10000 [00:06<00:02, 1154.02it/s]

73%|███████▎ | 7308/10000 [00:06<00:02, 1153.75it/s]

74%|███████▍ | 7424/10000 [00:06<00:02, 1153.85it/s]

75%|███████▌ | 7540/10000 [00:06<00:02, 1154.15it/s]

77%|███████▋ | 7656/10000 [00:06<00:02, 1153.86it/s]

78%|███████▊ | 7772/10000 [00:06<00:01, 1154.08it/s]

79%|███████▉ | 7888/10000 [00:06<00:01, 1154.07it/s]

80%|████████ | 8004/10000 [00:06<00:01, 1154.53it/s]

81%|████████ | 8120/10000 [00:07<00:01, 1153.80it/s]

82%|████████▏ | 8236/10000 [00:07<00:01, 1153.19it/s]

84%|████████▎ | 8352/10000 [00:07<00:01, 1153.35it/s]

85%|████████▍ | 8468/10000 [00:07<00:01, 1153.79it/s]

86%|████████▌ | 8584/10000 [00:07<00:01, 1154.72it/s]

87%|████████▋ | 8700/10000 [00:07<00:01, 1154.84it/s]

88%|████████▊ | 8816/10000 [00:07<00:01, 1154.93it/s]

89%|████████▉ | 8932/10000 [00:07<00:00, 1155.69it/s]

90%|█████████ | 9048/10000 [00:07<00:00, 1155.54it/s]

92%|█████████▏| 9164/10000 [00:07<00:00, 1155.69it/s]

93%|█████████▎| 9280/10000 [00:08<00:00, 1154.70it/s]

94%|█████████▍| 9396/10000 [00:08<00:00, 1153.92it/s]

95%|█████████▌| 9512/10000 [00:08<00:00, 1153.47it/s]

96%|█████████▋| 9628/10000 [00:08<00:00, 1153.29it/s]

97%|█████████▋| 9744/10000 [00:08<00:00, 1153.35it/s]

99%|█████████▊| 9860/10000 [00:08<00:00, 1147.60it/s]

100%|█████████▉| 9976/10000 [00:08<00:00, 1149.54it/s]

100%|██████████| 10000/10000 [00:08<00:00, 1152.93it/s]

The inversion result from `emcee`:

============================

Summary for inversion result

============================

SUCCESS

----------------------------

sampler: <emcee.ensemble.EnsembleSampler object>

blob_names: ['log_likelihood', 'log_prior']

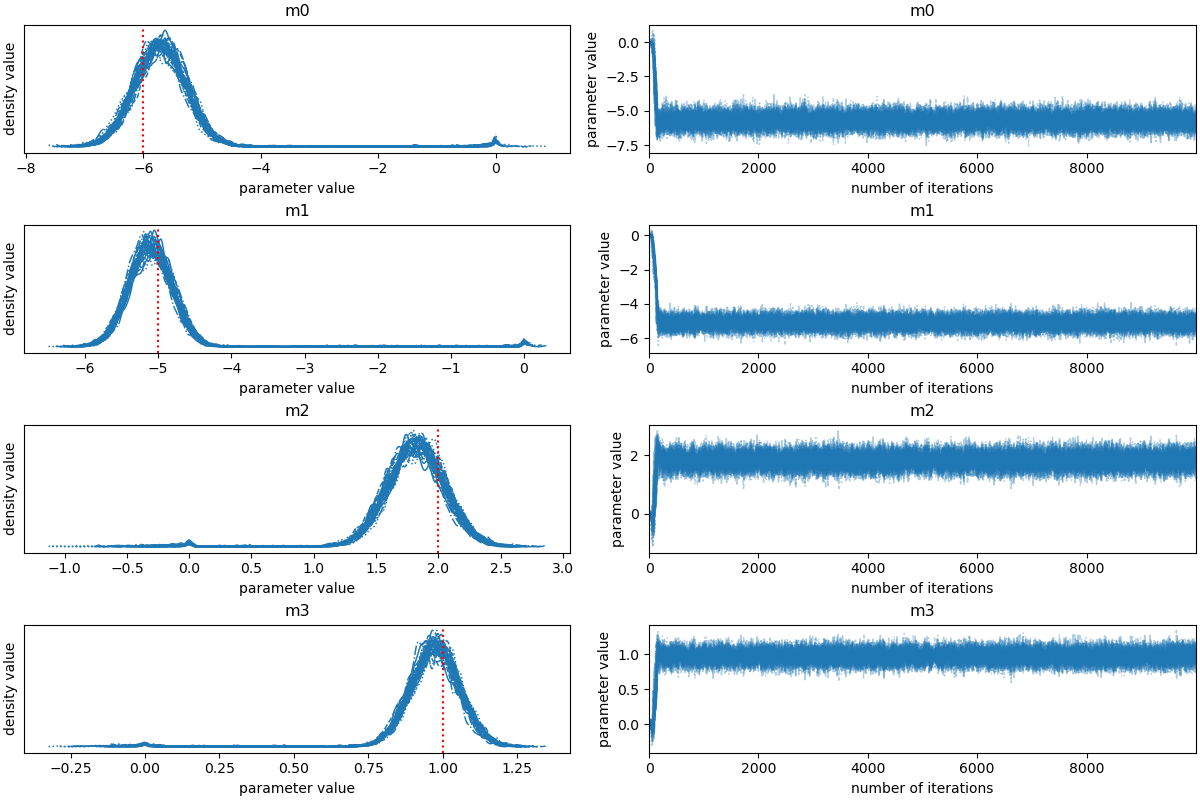

Post-sampling analysis#

By default the raw sampler resulting object is attached to cofi’s

inversion result.

Optionally, you can convert that into an arviz data structure to

have access to a range of analysis functions. (See more details in

arviz

documentation).

import arviz as az

labels = ["m0", "m1", "m2","m3"]

sampler = inv_result_3.sampler

az_idata = az.from_emcee(sampler, var_names=labels)

# az_idata = inv_result_3.to_arviz() # alternatively

az_idata.get("posterior")

# a standard `trace` plot

axes = az.plot_trace(az_idata, backend_kwargs={"constrained_layout":True});

# add legends

for i, axes_pair in enumerate(axes):

ax1 = axes_pair[0]

ax2 = axes_pair[1]

ax1.axvline(true_model[i], linestyle='dotted', color='red')

ax1.set_xlabel("parameter value")

ax1.set_ylabel("density value")

ax2.set_xlabel("number of iterations")

ax2.set_ylabel("parameter value")

autocorrelation time: [ 87.58993647 110.95951699 68.36367543 89.68019852]

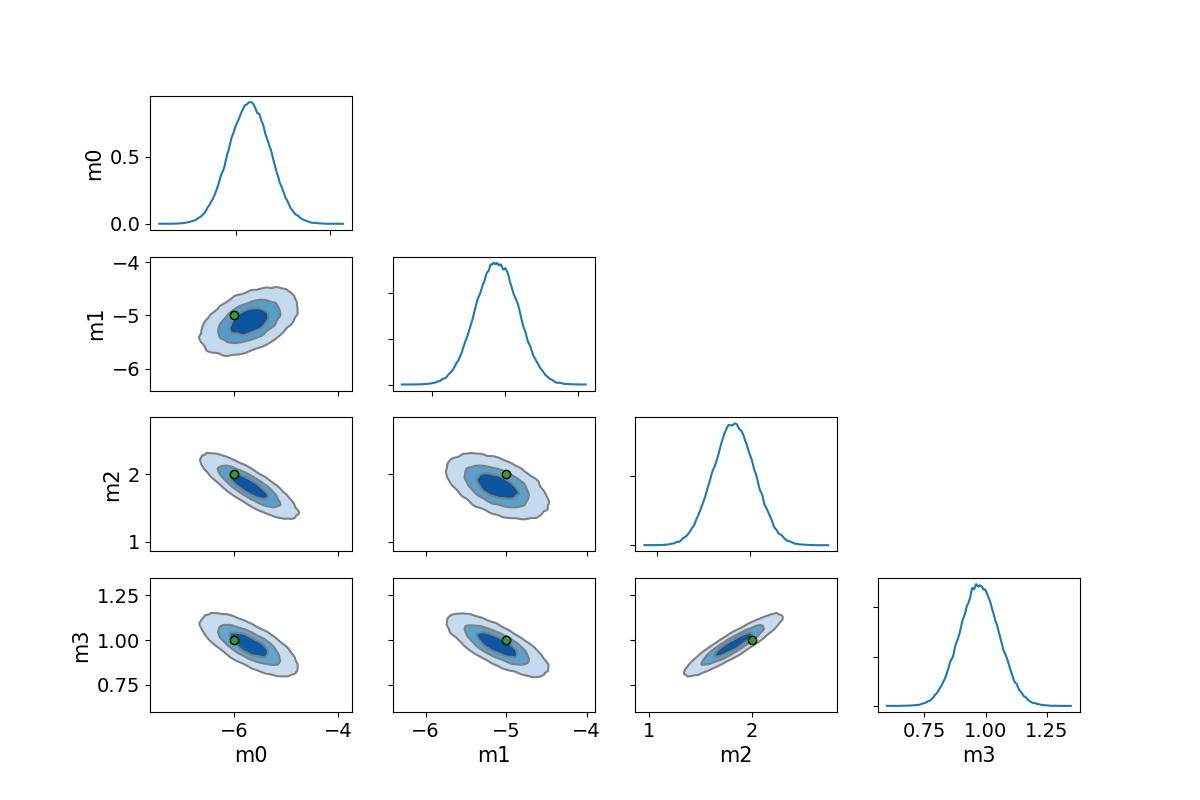

# a Corner plot

fig, axes = plt.subplots(nparams, nparams, figsize=(12,8))

if(False): # if we are plotting the model ensemble use this

az.plot_pair(

az_idata.sel(draw=slice(300,None)),

marginals=True,

reference_values=dict(zip([f"m{i}" for i in range(4)], true_model.tolist())),

ax=axes,

);

else: # if we wish to plot a kernel density plot then use this option

az.plot_pair(

az_idata.sel(draw=slice(300,None)),

marginals=True,

reference_values=dict(zip([f"m{i}" for i in range(4)], true_model.tolist())),

kind="kde",

kde_kwargs={

"hdi_probs": [0.3, 0.6, 0.9], # Plot 30%, 60% and 90% HDI contours

"contourf_kwargs": {"cmap": "Blues"},

},

ax=axes,

);

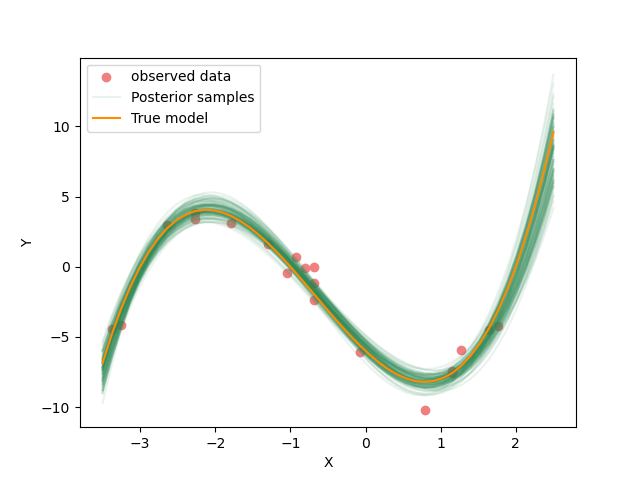

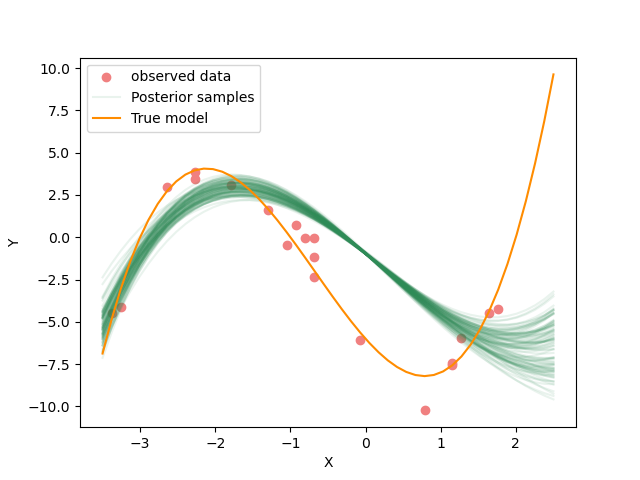

Now we plot the predicted curves for the posterior ensemble of solutions.

flat_samples = sampler.get_chain(discard=300, thin=30, flat=True)

inds = np.random.randint(len(flat_samples), size=100) # get a random selection from posterior ensemble

plot_data()

plot_models(flat_samples[inds])

plot_model(x,true_y, "True model", color="darkorange")

Expected values, credible intervals and model covariance matrix from the ensemble#

print("\n Expected value and 95% credible intervals ")

for i in range(ndim):

mcmc = np.percentile(flat_samples[:, i], [5, 50, 95])

print(" {} {:7.3f} [{:7.3f}, {:7.3f}]".format(labels[i],mcmc[1],mcmc[0],mcmc[2]))

Expected value and 95% credible intervals

m0 -5.713 [ -6.436, -4.994]

m1 -5.112 [ -5.601, -4.631]

m2 1.822 [ 1.445, 2.193]

m3 0.974 [ 0.839, 1.108]

CMpost = np.cov(flat_samples.T)

CM_std= np.std(flat_samples,axis=0)

print('Posterior model covariance matrix\n',CMpost)

print('\n Posterior estimate of model standard deviations in each parameter')

for i in range(ndim):

print(" {} {:7.4f}".format(labels[i],CM_std[i]))

Posterior model covariance matrix

[[ 0.19127786 0.05764904 -0.08160666 -0.02533072]

[ 0.05764904 0.08724585 -0.03311225 -0.01816461]

[-0.08160666 -0.03311225 0.05134851 0.01683258]

[-0.02533072 -0.01816461 0.01683258 0.00668753]]

Posterior estimate of model standard deviations in each parameter

m0 0.4373

m1 0.2954

m2 0.2266

m3 0.0818

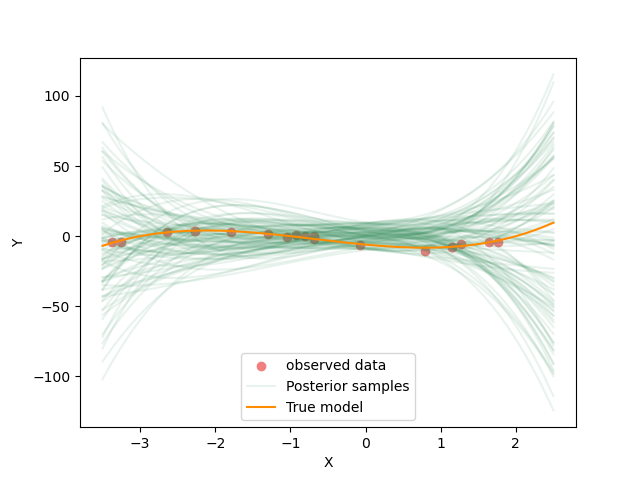

Challenge: Change the prior model bounds#

Replace the previous prior bounds to new values

The original uniform bounds had

\({\mathbf l}^T = (-10.,-10.,-10.,-10.)\), and \({\mathbf u}^T = (10.,10.,10.,10.)\).

Lets replace with

\({\mathbf l}^T = (-1.,-10.,-10.,-10.)\), and \({\mathbf u}^T = (2.,10.,10.,10.)\).

We have only changed the bounds of the first parameter. However since the true value of constant term was 6, these bounds are now inconsistent with the true model.

What does this do to the posterior distribution?

Start from the code template below:

m_lower_bound = <CHANGE ME> # lower bound for uniform prior

m_upper_bound = <CHANGE ME> # upper bound for uniform prior

def log_prior(model): # uniform distribution

for i in range(len(m_lower_bound)):

if model[i] < m_lower_bound[i] or model[i] > m_upper_bound[i]: return -np.inf

return 0.0 # model lies within bounds -> return log(1)

######## CoFI BaseProblem - update information

inv_problem.set_log_prior(log_prior)

######## CoFI Inversion - run it

inv_4 = Inversion(inv_problem, inv_options_3)

inv_result_4 = inv_4.run()

flat_samples = inv_result_4.sampler.get_chain(discard=300, thin=30, flat=True)

inds = np.random.randint(len(flat_samples), size=100) # get a random selection from posterior ensemble

print("Resulting samples with prior model lower bounds of <CHANGE ME>, upper bounds of <CHANGE ME>")

plot_data()

plot_models(flat_samples[inds])

plot_model(x, true_y, "True model", color="darkorange")

# Copy the template above, Replace <CHANGE ME> with your answer

#@title Solution

m_lower_bound = np.array([-1,-10,-10,-10]) # lower bound for uniform prior

m_upper_bound = np.array([2,10,10,10]) # upper bound for uniform prior

def log_prior(model): # uniform distribution

for i in range(len(m_lower_bound)):

if model[i] < m_lower_bound[i] or model[i] > m_upper_bound[i]: return -np.inf

return 0.0 # model lies within bounds -> return log(1)

######## CoFI BaseProblem - update information

inv_problem.set_log_prior(log_prior)

######## CoFI Inversion - run it

inv_4 = Inversion(inv_problem, inv_options_3)

inv_result_4 = inv_4.run()

flat_samples = inv_result_4.sampler.get_chain(discard=300, thin=30, flat=True)

inds = np.random.randint(len(flat_samples), size=100) # get a random selection from posterior ensemble

print("Resulting samples with prior model lower bounds of [-1,-10,-10,-10], upper bounds of [2,10,10,10]")

plot_data()

plot_models(flat_samples[inds])

plot_model(x, true_y, "True model", color="darkorange")

0%| | 0/10000 [00:00<?, ?it/s]

1%| | 117/10000 [00:00<00:08, 1163.76it/s]

2%|▏ | 238/10000 [00:00<00:08, 1189.64it/s]

4%|▎ | 362/10000 [00:00<00:07, 1208.31it/s]

5%|▍ | 485/10000 [00:00<00:07, 1214.27it/s]

6%|▌ | 608/10000 [00:00<00:07, 1218.63it/s]

7%|▋ | 732/10000 [00:00<00:07, 1225.21it/s]

9%|▊ | 855/10000 [00:00<00:07, 1224.43it/s]

10%|▉ | 979/10000 [00:00<00:07, 1226.85it/s]

11%|█ | 1103/10000 [00:00<00:07, 1229.22it/s]

12%|█▏ | 1226/10000 [00:01<00:07, 1226.40it/s]

13%|█▎ | 1349/10000 [00:01<00:07, 1222.96it/s]

15%|█▍ | 1473/10000 [00:01<00:06, 1225.46it/s]

16%|█▌ | 1597/10000 [00:01<00:06, 1228.00it/s]

17%|█▋ | 1720/10000 [00:01<00:06, 1223.67it/s]

18%|█▊ | 1844/10000 [00:01<00:06, 1226.36it/s]

20%|█▉ | 1968/10000 [00:01<00:06, 1227.50it/s]

21%|██ | 2091/10000 [00:01<00:06, 1226.61it/s]

22%|██▏ | 2215/10000 [00:01<00:06, 1228.11it/s]

23%|██▎ | 2338/10000 [00:01<00:06, 1226.50it/s]

25%|██▍ | 2461/10000 [00:02<00:06, 1223.21it/s]

26%|██▌ | 2585/10000 [00:02<00:06, 1227.52it/s]

27%|██▋ | 2708/10000 [00:02<00:05, 1225.42it/s]

28%|██▊ | 2831/10000 [00:02<00:05, 1224.93it/s]

30%|██▉ | 2954/10000 [00:02<00:05, 1223.27it/s]

31%|███ | 3077/10000 [00:02<00:05, 1220.66it/s]

32%|███▏ | 3200/10000 [00:02<00:05, 1222.98it/s]

33%|███▎ | 3323/10000 [00:02<00:05, 1220.57it/s]

34%|███▍ | 3446/10000 [00:02<00:05, 1222.56it/s]

36%|███▌ | 3569/10000 [00:02<00:05, 1221.89it/s]

37%|███▋ | 3692/10000 [00:03<00:05, 1214.62it/s]

38%|███▊ | 3815/10000 [00:03<00:05, 1217.09it/s]

39%|███▉ | 3938/10000 [00:03<00:04, 1220.24it/s]

41%|████ | 4061/10000 [00:03<00:04, 1219.42it/s]

42%|████▏ | 4184/10000 [00:03<00:04, 1222.09it/s]

43%|████▎ | 4307/10000 [00:03<00:04, 1220.74it/s]

44%|████▍ | 4431/10000 [00:03<00:04, 1225.48it/s]

46%|████▌ | 4554/10000 [00:03<00:04, 1224.83it/s]

47%|████▋ | 4677/10000 [00:03<00:04, 1221.85it/s]

48%|████▊ | 4801/10000 [00:03<00:04, 1226.88it/s]

49%|████▉ | 4924/10000 [00:04<00:04, 1226.13it/s]

50%|█████ | 5048/10000 [00:04<00:04, 1227.83it/s]

52%|█████▏ | 5171/10000 [00:04<00:03, 1228.38it/s]

53%|█████▎ | 5294/10000 [00:04<00:03, 1227.04it/s]

54%|█████▍ | 5417/10000 [00:04<00:03, 1227.26it/s]

55%|█████▌ | 5540/10000 [00:04<00:03, 1226.66it/s]

57%|█████▋ | 5663/10000 [00:04<00:03, 1226.48it/s]

58%|█████▊ | 5786/10000 [00:04<00:03, 1223.80it/s]

59%|█████▉ | 5909/10000 [00:04<00:03, 1224.01it/s]

60%|██████ | 6032/10000 [00:04<00:03, 1225.35it/s]

62%|██████▏ | 6155/10000 [00:05<00:03, 1222.78it/s]

63%|██████▎ | 6278/10000 [00:05<00:03, 1222.95it/s]

64%|██████▍ | 6401/10000 [00:05<00:02, 1223.04it/s]

65%|██████▌ | 6525/10000 [00:05<00:02, 1225.64it/s]

66%|██████▋ | 6649/10000 [00:05<00:02, 1227.91it/s]

68%|██████▊ | 6772/10000 [00:05<00:02, 1226.15it/s]

69%|██████▉ | 6895/10000 [00:05<00:02, 1224.96it/s]

70%|███████ | 7018/10000 [00:05<00:02, 1224.62it/s]

71%|███████▏ | 7142/10000 [00:05<00:02, 1228.51it/s]

73%|███████▎ | 7265/10000 [00:05<00:02, 1223.14it/s]

74%|███████▍ | 7389/10000 [00:06<00:02, 1225.65it/s]

75%|███████▌ | 7513/10000 [00:06<00:02, 1227.76it/s]

76%|███████▋ | 7636/10000 [00:06<00:01, 1221.68it/s]

78%|███████▊ | 7759/10000 [00:06<00:01, 1223.02it/s]

79%|███████▉ | 7883/10000 [00:06<00:01, 1225.99it/s]

80%|████████ | 8006/10000 [00:06<00:01, 1226.62it/s]

81%|████████▏ | 8129/10000 [00:06<00:01, 1224.95it/s]

83%|████████▎ | 8252/10000 [00:06<00:01, 1225.67it/s]

84%|████████▍ | 8375/10000 [00:06<00:01, 1225.23it/s]

85%|████████▍ | 8498/10000 [00:06<00:01, 1222.35it/s]

86%|████████▌ | 8621/10000 [00:07<00:01, 1223.58it/s]

87%|████████▋ | 8744/10000 [00:07<00:01, 1225.43it/s]

89%|████████▊ | 8868/10000 [00:07<00:00, 1227.45it/s]

90%|████████▉ | 8991/10000 [00:07<00:00, 1227.79it/s]

91%|█████████ | 9114/10000 [00:07<00:00, 1225.95it/s]

92%|█████████▏| 9237/10000 [00:07<00:00, 1222.79it/s]

94%|█████████▎| 9360/10000 [00:07<00:00, 1222.13it/s]

95%|█████████▍| 9483/10000 [00:07<00:00, 1221.95it/s]

96%|█████████▌| 9607/10000 [00:07<00:00, 1225.38it/s]

97%|█████████▋| 9730/10000 [00:07<00:00, 1225.11it/s]

99%|█████████▊| 9853/10000 [00:08<00:00, 1225.88it/s]

100%|█████████▉| 9976/10000 [00:08<00:00, 1226.04it/s]

100%|██████████| 10000/10000 [00:08<00:00, 1223.90it/s]

Resulting samples with prior model lower bounds of [-1,-10,-10,-10], upper bounds of [2,10,10,10]

Why do you think the posterior distribution looks like this?

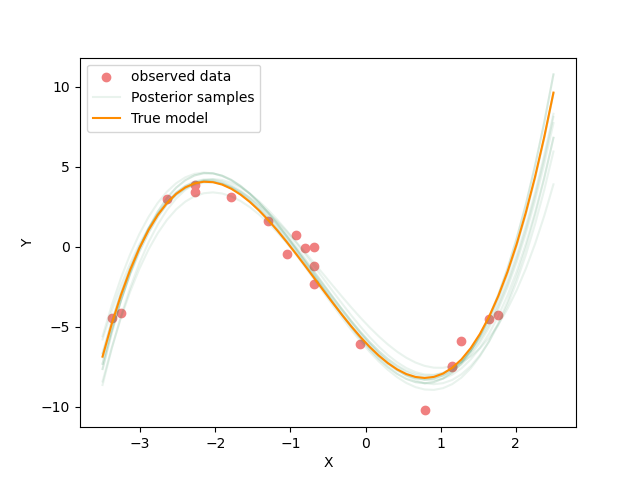

Challenge: Change the data uncertainty#

To change the data uncertainty we increase sigma and then redefine

the log-Likelihood.

Here we increase the assumed data standard deviation by a factor of of 50! So we are telling the inversion that the data are far less accurate than they actually are.

Lets return the prior to the original bounds.

m_lower_bound = np.ones(4) * (-10.) # lower bound for uniform prior

m_upper_bound = np.ones(4) * 10 # upper bound for uniform prior

def log_prior(model): # uniform distribution

for i in range(len(m_lower_bound)):

if model[i] < m_lower_bound[i] or model[i] > m_upper_bound[i]: return -np.inf

return 0.0 # model lies within bounds -> return log(1)

Your challenge is then to tell CoFI that the Likelihood and prior have changed and then to rerun the sample, and plot results.

Feel free to start from the code below:

######## CoFI BaseProblem - update information

inv_problem.set_log_likelihood(<CHANGE ME>)

inv_problem.set_log_prior(<CHANGE ME>)

######## CoFI Inversion - run it

inv_5 = Inversion(inv_problem, inv_options_3)

inv_result_5 = inv_5.run()

flat_samples = inv_result_5.sampler.get_chain(discard=300, thin=30, flat=True)

inds = np.random.randint(len(flat_samples), size=100) # get a random selection from posterior ensemble

print("Resulting samples from changed data uncertainty")

plot_data()

plot_models(flat_samples[inds])

plot_model(x,true_y, "True model", color="darkorange")

# Copy the template above, Replace <CHANGE ME> with your answer

The answer is in the next cells if you want to run them.

#@title Solution

######## CoFI BaseProblem - update information

inv_problem.set_log_likelihood(log_likelihood)

inv_problem.set_log_prior(log_prior)

######## CoFI Inversion - run it

inv_5 = Inversion(inv_problem, inv_options_3)

inv_result_5 = inv_5.run()

flat_samples = inv_result_5.sampler.get_chain(discard=300, thin=30, flat=True)

inds = np.random.randint(len(flat_samples), size=100) # get a random selection from posterior ensemble

print("Resulting samples from changed data uncertainty")

plot_data()

plot_models(flat_samples[inds])

plot_model(x,true_y, "True model", color="darkorange")

0%| | 0/10000 [00:00<?, ?it/s]

1%|▏ | 126/10000 [00:00<00:07, 1255.11it/s]

3%|▎ | 264/10000 [00:00<00:07, 1326.04it/s]

4%|▍ | 402/10000 [00:00<00:07, 1347.53it/s]

5%|▌ | 541/10000 [00:00<00:06, 1361.62it/s]

7%|▋ | 680/10000 [00:00<00:06, 1368.32it/s]

8%|▊ | 818/10000 [00:00<00:06, 1371.88it/s]

10%|▉ | 956/10000 [00:00<00:06, 1370.45it/s]

11%|█ | 1095/10000 [00:00<00:06, 1374.84it/s]

12%|█▏ | 1233/10000 [00:00<00:06, 1370.89it/s]

14%|█▎ | 1372/10000 [00:01<00:06, 1374.03it/s]

15%|█▌ | 1510/10000 [00:01<00:06, 1369.40it/s]

16%|█▋ | 1649/10000 [00:01<00:06, 1374.91it/s]

18%|█▊ | 1789/10000 [00:01<00:05, 1381.09it/s]

19%|█▉ | 1928/10000 [00:01<00:05, 1383.31it/s]

21%|██ | 2067/10000 [00:01<00:05, 1379.06it/s]

22%|██▏ | 2205/10000 [00:01<00:05, 1376.36it/s]

23%|██▎ | 2345/10000 [00:01<00:05, 1381.55it/s]

25%|██▍ | 2484/10000 [00:01<00:05, 1379.79it/s]

26%|██▋ | 2625/10000 [00:01<00:05, 1386.61it/s]

28%|██▊ | 2764/10000 [00:02<00:05, 1386.84it/s]

29%|██▉ | 2903/10000 [00:02<00:05, 1385.63it/s]

30%|███ | 3042/10000 [00:02<00:05, 1380.03it/s]

32%|███▏ | 3181/10000 [00:02<00:04, 1378.08it/s]

33%|███▎ | 3319/10000 [00:02<00:04, 1376.38it/s]

35%|███▍ | 3457/10000 [00:02<00:04, 1376.43it/s]

36%|███▌ | 3595/10000 [00:02<00:04, 1372.03it/s]

37%|███▋ | 3733/10000 [00:02<00:04, 1372.93it/s]

39%|███▊ | 3872/10000 [00:02<00:04, 1375.43it/s]

40%|████ | 4011/10000 [00:02<00:04, 1376.91it/s]

41%|████▏ | 4149/10000 [00:03<00:04, 1374.40it/s]

43%|████▎ | 4288/10000 [00:03<00:04, 1377.72it/s]

44%|████▍ | 4426/10000 [00:03<00:04, 1378.36it/s]

46%|████▌ | 4566/10000 [00:03<00:03, 1382.75it/s]

47%|████▋ | 4705/10000 [00:03<00:03, 1382.10it/s]

48%|████▊ | 4844/10000 [00:03<00:03, 1381.30it/s]

50%|████▉ | 4983/10000 [00:03<00:03, 1377.38it/s]

51%|█████ | 5123/10000 [00:03<00:03, 1383.14it/s]

53%|█████▎ | 5263/10000 [00:03<00:03, 1385.29it/s]

54%|█████▍ | 5402/10000 [00:03<00:03, 1380.28it/s]

55%|█████▌ | 5541/10000 [00:04<00:03, 1378.84it/s]

57%|█████▋ | 5679/10000 [00:04<00:03, 1373.87it/s]

58%|█████▊ | 5818/10000 [00:04<00:03, 1376.21it/s]

60%|█████▉ | 5956/10000 [00:04<00:02, 1370.52it/s]

61%|██████ | 6094/10000 [00:04<00:02, 1372.92it/s]

62%|██████▏ | 6233/10000 [00:04<00:02, 1375.60it/s]

64%|██████▎ | 6372/10000 [00:04<00:02, 1378.69it/s]

65%|██████▌ | 6511/10000 [00:04<00:02, 1380.55it/s]

66%|██████▋ | 6650/10000 [00:04<00:02, 1380.84it/s]

68%|██████▊ | 6789/10000 [00:04<00:02, 1381.15it/s]

69%|██████▉ | 6928/10000 [00:05<00:02, 1377.93it/s]

71%|███████ | 7067/10000 [00:05<00:02, 1380.36it/s]

72%|███████▏ | 7207/10000 [00:05<00:02, 1383.62it/s]

73%|███████▎ | 7346/10000 [00:05<00:01, 1376.59it/s]

75%|███████▍ | 7485/10000 [00:05<00:01, 1379.68it/s]

76%|███████▌ | 7623/10000 [00:05<00:01, 1374.75it/s]

78%|███████▊ | 7762/10000 [00:05<00:01, 1377.02it/s]

79%|███████▉ | 7902/10000 [00:05<00:01, 1382.58it/s]

80%|████████ | 8041/10000 [00:05<00:01, 1378.73it/s]

82%|████████▏ | 8180/10000 [00:05<00:01, 1380.52it/s]

83%|████████▎ | 8320/10000 [00:06<00:01, 1384.65it/s]

85%|████████▍ | 8459/10000 [00:06<00:01, 1384.91it/s]

86%|████████▌ | 8598/10000 [00:06<00:01, 1383.79it/s]

87%|████████▋ | 8737/10000 [00:06<00:00, 1383.42it/s]

89%|████████▉ | 8876/10000 [00:06<00:00, 1379.58it/s]

90%|█████████ | 9017/10000 [00:06<00:00, 1387.07it/s]

92%|█████████▏| 9156/10000 [00:06<00:00, 1386.05it/s]

93%|█████████▎| 9295/10000 [00:06<00:00, 1384.86it/s]

94%|█████████▍| 9434/10000 [00:06<00:00, 1383.75it/s]

96%|█████████▌| 9573/10000 [00:06<00:00, 1384.45it/s]

97%|█████████▋| 9712/10000 [00:07<00:00, 1385.36it/s]

99%|█████████▊| 9851/10000 [00:07<00:00, 1382.80it/s]

100%|█████████▉| 9990/10000 [00:07<00:00, 1382.58it/s]

100%|██████████| 10000/10000 [00:07<00:00, 1377.85it/s]

Resulting samples from changed data uncertainty

Challenge: Change the number of walkers / steps in the McMC algorithm (optional)#

Now lets decrease the number of steps performed by the McMC algorithm. It will be faster but perform less exploration of the model parameters.

We suggest you reduce the number of steps taken by all 32 random walkers and see how it affects the posterior ensemble.

You can start from code template below:

# change number of steps

nsteps = <CHANGE ME> # instead of 10000

# change number of walkers

nwalkers = <CHANGE ME> # instead of 32

walkers_start = np.zeros(nparams) + 1e-4 * np.random.randn(nwalkers, ndim)

# let's return to the old uncertainty settings

sigma = 1.0 # common noise standard deviation

Cdinv = np.eye(len(data_y))/(sigma**2) # inverse data covariance matrix

######## CoFI InversionOptions - get a different tool

inv_options_3.set_params(nsteps=nsteps, nwalkers=nwalkers, initial_state=walkers_start)

######## CoFI Inversion - run it

inv_6 = Inversion(inv_problem, inv_options_3)

inv_result_6 = inv_6.run()

######## CoFI InversionResult - plot result

flat_samples = inv_result_6.sampler.get_chain(discard=300, thin=30, flat=True)

inds = np.random.randint(len(flat_samples), size=10) # get a random selection from posterior ensemble

print(f"Inference results from {nsteps} steps and {nwalkers} walkers")

plot_data()

plot_models(flat_samples[inds])

plot_model(x,true_y, "True model", color="darkorange")

# Copy the template above, Replace <CHANGE ME> with your answer

#@title Solution

# change number of steps

nsteps = 400 # instead of 10000

# change number of walkers

nwalkers = 30 # instead of 32

walkers_start = np.zeros(nparams) + 1e-4 * np.random.randn(nwalkers, ndim)

# let's return to the old uncertainty settings

sigma = 1.0 # common noise standard deviation

Cdinv = np.eye(len(data_y))/(sigma**2) # inverse data covariance matrix

######## CoFI InversionOptions - get a different tool

inv_options_3.set_params(nsteps=nsteps, nwalkers=nwalkers, initial_state=walkers_start)

######## CoFI Inversion - run it

inv_6 = Inversion(inv_problem, inv_options_3)

inv_result_6 = inv_6.run()

######## CoFI InversionResult - plot result

flat_samples = inv_result_6.sampler.get_chain(discard=300, thin=30, flat=True)

inds = np.random.randint(len(flat_samples), size=10) # get a random selection from posterior ensemble

print(f"Inference results from {nsteps} steps and {nwalkers} walkers")

plot_data()

plot_models(flat_samples[inds])

plot_model(x,true_y, "True model", color="darkorange")

0%| | 0/400 [00:00<?, ?it/s]

30%|███ | 121/400 [00:00<00:00, 1202.67it/s]

60%|██████ | 242/400 [00:00<00:00, 1204.07it/s]

91%|█████████ | 363/400 [00:00<00:00, 1204.53it/s]

100%|██████████| 400/400 [00:00<00:00, 1203.61it/s]

Inference results from 400 steps and 30 walkers

Where to next?#

Linear regression with Eustatic Sea-level data - link to notebook

Watermark#

watermark_list = ["cofi", "numpy", "scipy", "matplotlib", "emcee", "arviz"]

for pkg in watermark_list:

pkg_var = __import__(pkg)

print(pkg, getattr(pkg_var, "__version__"))

cofi 0.2.7

numpy 1.24.4

scipy 1.12.0

matplotlib 3.8.3

emcee 3.1.4

arviz 0.17.0

sphinx_gallery_thumbnail_number = -1

Total running time of the script: (0 minutes 29.038 seconds)